AI Hardware: How GPUs and TPUs Power Machine Learning

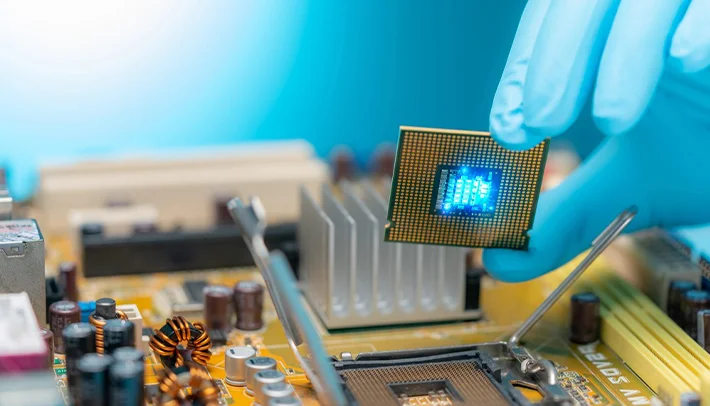

In a large data centre, machines hum with purpose. Rows of chips glow faintly as they carry out trillions of calculations every second. There is no chaos, only rhythm: a steady heartbeat of artificial intelligence. Behind every smart assistant, every self-driving car, and every recommendation system lies a story of hardware designed for extraordinary speed. This is the story of how GPUs and TPUs became the muscle behind the mind of machine learning.

Before this new wave of intelligent systems, computers processed information very differently. Traditional CPUs were built to handle tasks one after another with careful precision—like expert craftsmen shaping one piece at a time. But modern AI demanded more data, more speed, and more complexity. Training a machine learning model means performing the same mathematical operations millions of times on massive datasets. CPUs simply could not keep up.

GPUs: Parallel Power for Deep Learning

Enter the GPU—originally built for rendering graphics in video games but destined for a greater purpose. Its architecture, powered by thousands of smaller cores, allowed it to process many tasks simultaneously. Imagine not one craftsman, but an entire workshop of skilled hands working together. This parallelism made GPUs ideal for deep learning, where enormous layers of neurons must process data at the same time.
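To make the workshop picture concrete, here is a minimal sketch in plain Python. It is not GPU code, and Python threads will not make it faster; it only shows that each element of a layer can be computed without looking at any other element, which is the property a GPU's many cores exploit. The function names (`relu`, `layer_sequential`, `layer_parallel`) are illustrative choices, not part of any library.

```python
from concurrent.futures import ThreadPoolExecutor


def relu(x):
    """Activation for one neuron's input; it depends on no other element."""
    return x if x > 0.0 else 0.0


def layer_sequential(inputs):
    """One worker handles every element in turn (the lone craftsman)."""
    return [relu(x) for x in inputs]


def layer_parallel(inputs, workers=4):
    """Several workers share the elements (the workshop).

    pool.map returns results in input order, whichever worker finishes first.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(relu, inputs))


inputs = [-2.0, -0.5, 0.0, 1.5, 3.0, -1.0, 4.0, 0.25]

# Because no element depends on another, both strategies agree exactly.
assert layer_sequential(inputs) == layer_parallel(inputs)
```

On a real GPU the same idea is applied at a much larger scale, with hardware threads rather than a small pool of Python workers.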

TPUs: Hardware Built for Tensor Operations

As AI models grew larger and more demanding, engineers sought even more specialized hardware. This led to the creation of TPUs, or Tensor Processing Units, developed by Google. If GPUs are versatile multitaskers, TPUs are finely tuned instruments. They are built specifically for tensor operations: arithmetic on multi-dimensional arrays of numbers, above all matrix multiplication, which is the fundamental mathematical pattern of machine learning. Their focus gives them remarkable efficiency in powering neural networks.
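As a rough illustration of what a tensor operation looks like, the sketch below writes one fully connected neural-network layer as a matrix multiplication plus a bias, using nested Python lists. The helper names (`matmul`, `dense_layer`, `multiply_accumulate_count`) are assumptions made for this example, and real accelerators perform this work in dedicated hardware rather than Python loops.

```python
def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix given as nested lists."""
    n, k, m = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]


def dense_layer(x, weights, bias):
    """Compute x @ weights + bias for a batch of input rows."""
    product = matmul(x, weights)
    return [[value + bias[j] for j, value in enumerate(row)] for row in product]


def multiply_accumulate_count(batch, n_in, n_out):
    """Multiply-add pairs needed by one dense layer for one batch."""
    return batch * n_in * n_out


x = [[1.0, 2.0], [3.0, 4.0]]                    # batch of 2 inputs, 2 features
weights = [[1.0, 0.0, -1.0], [0.5, 1.0, 2.0]]   # maps 2 features to 3 outputs
bias = [0.0, 1.0, 0.0]

outputs = dense_layer(x, weights, bias)
small_cost = multiply_accumulate_count(batch=2, n_in=2, n_out=3)
large_cost = multiply_accumulate_count(batch=256, n_in=1024, n_out=1024)
```

The tiny layer here needs 12 multiply-adds, while a single 1024-by-1024 layer on a batch of 256 already needs about 268 million. That gap is why hardware dedicated to this one pattern pays off.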

How GPUs and TPUs Shape AI Today

Speed and Parallelism: Both GPUs and TPUs handle countless calculations at once, making them ideal for training complex models faster than traditional processors.

Energy Efficiency: Because TPUs are specialised for the repetitive mathematical operations of neural networks, they are designed to deliver more of that work per watt than general-purpose processors.

Scalability: Multiple GPUs or TPUs can be linked together so that a single massive model trains across many chips at once, each working through its own share of an enormous dataset.

Specialised Architecture: Their internal designs include dedicated units for matrix multiplication, the operation that dominates neural networks and deep learning algorithms.

Continuous Innovation: Engineers continue to refine these chips—making them smaller, cooler, faster, and more powerful with each new generation.
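The scalability point above can be sketched with a toy example. One common approach, usually called data parallelism, gives each chip a slice of the batch, lets it compute a gradient locally, and then averages the results. The code below simulates that on a single machine for a one-parameter model y = w * x. The "devices" are hypothetical, all names are illustrative, and the sketch assumes the data divides into equal shards.

```python
def gradient(w, shard):
    """Gradient of mean squared error for the model y = w * x on one shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def split(data, n_devices):
    """Cut the data into n_devices equal shards, one per hypothetical device."""
    assert len(data) % n_devices == 0, "sketch assumes equal-sized shards"
    size = len(data) // n_devices
    return [data[i * size:(i + 1) * size] for i in range(n_devices)]


def data_parallel_gradient(w, data, n_devices):
    """Each device computes a local gradient; the results are then averaged."""
    local = [gradient(w, shard) for shard in split(data, n_devices)]
    return sum(local) / n_devices


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]  # points on y = 2x
w = 0.0

full = gradient(w, data)                                 # one chip, whole batch
sharded = data_parallel_gradient(w, data, n_devices=2)   # two chips, half each

# With equal shards, the averaged gradient matches the single-device one.
assert abs(full - sharded) < 1e-9
```

In practice the averaging step runs over a fast interconnect between chips, and the cost of that communication is one of the main limits on how far this approach scales.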

The Challenges Behind the Power

This immense computing power comes at a cost. High-performance chips generate significant heat and consume large amounts of electricity. Researchers are exploring advanced cooling systems, better materials, and smarter algorithms to reduce energy waste and meet the rising demands of AI.

The Future of AI Hardware

The journey of AI hardware is far from over. With each new generation of chips, the limits of what machines can learn and how quickly they adapt continue to expand. Tomorrow’s breakthroughs in artificial intelligence may not come from software alone, but from deep within the glowing cores of the hardware that powers it. Inside every chip, the future of intelligence unfolds—one calculation at a time.